What is a load balancer?

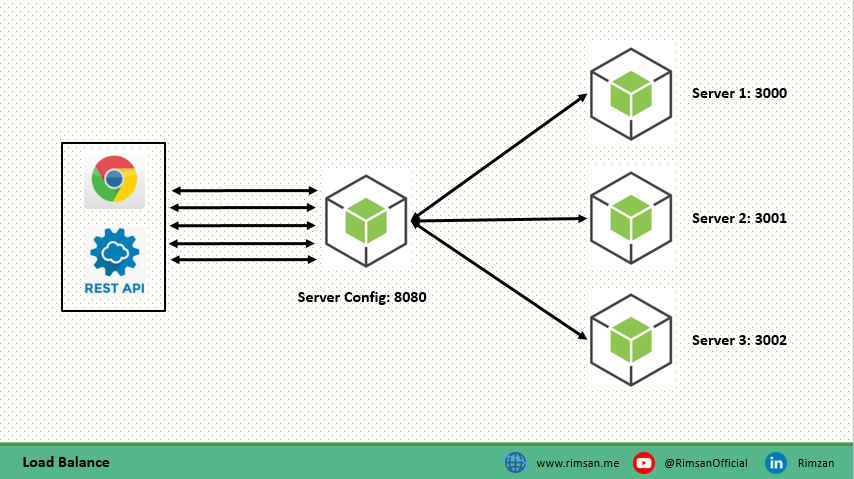

A load balancer is a device or piece of software that acts as a reverse proxy and distributes network or application traffic across a number of servers. Load balancers are used to increase the capacity (number of concurrent users) and reliability of applications.
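To make the reverse-proxy idea concrete, here is a minimal sketch that uses only Node's built-in `http` module. It accepts a client's request, sends the same request to one backend, and streams the backend's response back to the client. The helper name `createReverseProxy` and its host/port parameters are hypothetical, chosen for illustration; a production proxy would also need timeouts and header rewriting that this sketch omits.

```javascript
const http = require('http');

// Hypothetical helper: a minimal reverse proxy that forwards every request
// to a single backend and relays the backend's response to the client.
function createReverseProxy(backendHost, backendPort) {
  return http.createServer((clientReq, clientRes) => {
    // Re-issue the client's request against the backend
    const upstreamReq = http.request(
      {
        host: backendHost,
        port: backendPort,
        method: clientReq.method,
        path: clientReq.url,
        headers: clientReq.headers,
      },
      (backendRes) => {
        // Copy the backend's status and headers, then stream its body
        clientRes.writeHead(backendRes.statusCode, backendRes.headers);
        backendRes.pipe(clientRes);
      }
    );

    // Backend unreachable: answer 502 if nothing has been sent yet
    upstreamReq.on('error', () => {
      if (clientRes.headersSent) {
        clientRes.destroy();
      } else {
        clientRes.writeHead(502);
        clientRes.end('Bad Gateway');
      }
    });

    // Stream the client's request body (if any) to the backend
    clientReq.pipe(upstreamReq);
  });
}
```

For example, `createReverseProxy('localhost', 3000).listen(8080)` would forward everything arriving on port 8080 to a server on port 3000. A load balancer is this same pattern with more than one backend to choose from.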

If your website or application sees limited traffic, load balancing might not be necessary. However, as its popularity grows and traffic surges, your primary server might struggle to cope. A single Node.js process runs your JavaScript on one thread, so on its own it cannot keep up with extremely high traffic volumes. Adding more servers addresses this, but you then need a load balancer to distribute traffic evenly across all of them.

Load balancer: A load balancer works like a traffic controller positioned in front of your application servers. It distributes client requests among all the servers that can handle them, making the best use of their speed and capacity. This ensures that no single server gets overwhelmed and drags down performance.
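Round robin is one simple way to spread requests: hand each new request to the next server in a fixed rotation, wrapping around after the last one. It is also the strategy the Express example in this article uses. The sketch below isolates just that selection step; `createRoundRobin` is a hypothetical helper name, and real load balancers often add health checks or weighting on top of it.

```javascript
// Hypothetical helper: returns a function that yields servers in
// round-robin order, wrapping back to the first after the last.
function createRoundRobin(servers) {
  if (servers.length === 0) {
    throw new Error('createRoundRobin needs at least one server');
  }
  let current = 0;
  return function next() {
    const server = servers[current];
    current = (current + 1) % servers.length;
    return server;
  };
}

const next = createRoundRobin([
  'http://localhost:3000',
  'http://localhost:3001',
  'http://localhost:3002',
]);

// Four picks: 3000, 3001, 3002, then 3000 again
for (let i = 0; i < 4; i++) {
  console.log(next());
}
```

Keeping the counter inside a closure means each balancer instance has its own rotation, rather than sharing one module-level variable.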

Using Express Web Server: An Express web server can itself act as the load balancer. If you are comfortable with Node.js, you can implement your own Express-based load balancer, as shown in the following example.

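Install two command-line tools globally: autocannon, an HTTP load-testing tool, and concurrently, which runs several commands in parallel.

```shell
npm i -g autocannon
npm i -g concurrently
```

Then create the project and install Express and axios:

```shell
mkdir load-balance
cd load-balance
npm init -y
npm i express axios
```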

Create two files: load-config.js for the load balancer server and index.js for the application servers.

Filename: load-config.js

```javascript
const express = require('express');
const axios = require('axios');

const app = express();

// Parse JSON request bodies so req.body can be forwarded
app.use(express.json());

// Application servers
const servers = [
  "http://localhost:3000",
  "http://localhost:3001",
  "http://localhost:3002"
];

// Track the current application server
let current = 0;

// Receive new request
// Forward to application server
const handler = async (req, res) => {
  // Destructure following properties from request object
  const { method, url, body } = req;

  // Select the current server to forward the request
  const server = servers[current];

  // Advance to the next server, wrapping back to 0 after the last one
  current = (current + 1) % servers.length;

  // Copy the client's headers, dropping Host (set for the new target)
  // and the body-framing headers (recomputed for the re-serialized body)
  const headers = { ...req.headers };
  delete headers.host;
  delete headers['content-length'];
  delete headers['transfer-encoding'];

  try {
    // Requesting to underlying application server
    const response = await axios({
      url: `${server}${url}`,
      method: method,
      headers: headers,
      data: body,
      // Relay upstream status codes (e.g. 404) instead of throwing on them
      validateStatus: () => true
    });

    // Send back the status and response data
    // from application server to client
    res.status(response.status).send(response.data);
  }
  catch (err) {
    // The application server could not be reached
    res.status(502).send("Server error!");
  }
};

// When receive new request
// Pass it to handler method
app.use(handler);

// Listen on PORT 8080; errors such as a busy port arrive as an 'error' event
app.listen(8080, () => {
  console.log("Load Balancer Server on PORT 8080");
}).on('error', () => {
  console.log("Failed PORT 8080");
});
```

Filename: index.js

```javascript
const express = require('express');

const app1 = express();
const app2 = express();
const app3 = express();

// Handler: reply with the number of the server that served the request
const handler = num => (req, res) => {
  res.send('Response from server Test: ' + num);
};

// Receive every request (any method, any path) and pass it to the handler.
// app.use() is used instead of app.get('*', ...), because Express 5
// rejects the bare '*' route pattern.
app1.use(handler(1));
app2.use(handler(2));
app3.use(handler(3));

// Start an application server and report failures such as a busy port
const start = (app, port) => {
  app.listen(port, () => {
    console.log(`Application Server PORT ${port}`);
  }).on('error', () => {
    console.log(`Failed PORT ${port}`);
  });
};

// Start servers on PORT 3000, 3001 and 3002
start(app1, 3000);
start(app2, 3001);
start(app3, 3002);
```

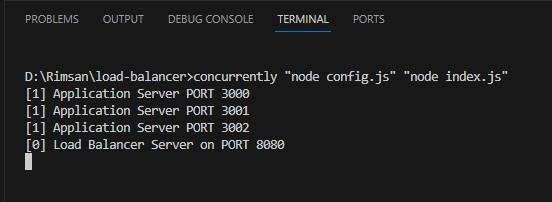

Open a command prompt in your project folder and use concurrently to run both scripts in parallel:

```shell
concurrently "node load-config.js" "node index.js"
```

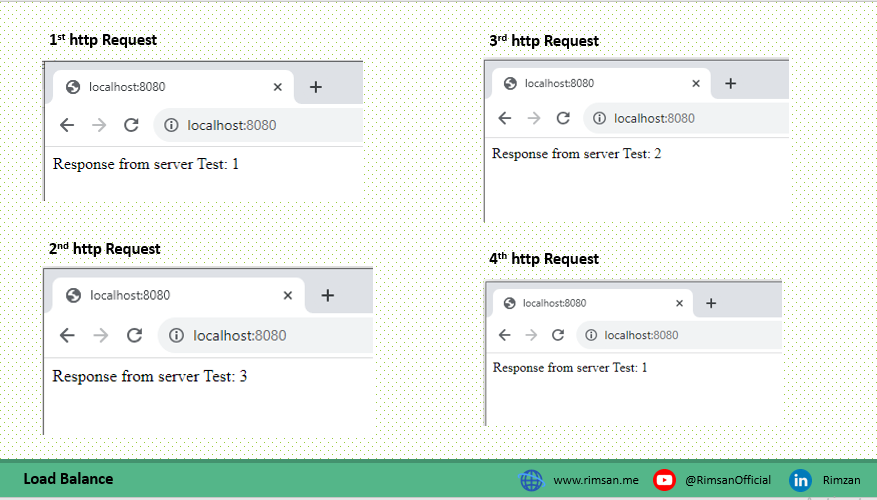

The above code starts three Express apps, on ports 3000, 3001 and 3002. The separate load balancer process alternates among these three in round-robin order: it sends one request to port 3000, the next to port 3001, the next to port 3002, and then starts again at port 3000.
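Because request number i (counting from 0) goes to server i mod 3, the requests spread as evenly as their count allows: over 10 requests, port 3000 receives 4 and ports 3001 and 3002 receive 3 each. The sketch below only simulates the rotation (no network calls); `countPerPort` is a hypothetical helper name.

```javascript
// Hypothetical helper: simulate round-robin assignment and count how many
// of the first `total` requests reach each port (request i -> ports[i % n]).
function countPerPort(ports, total) {
  const counts = new Map(ports.map((port) => [port, 0]));
  for (let i = 0; i < total; i++) {
    const port = ports[i % ports.length];
    counts.set(port, counts.get(port) + 1);
  }
  return counts;
}

for (const [port, n] of countPerPort([3000, 3001, 3002], 10)) {
  console.log(`port ${port}: ${n} requests`);
}
// port 3000: 4 requests
// port 3001: 3 requests
// port 3002: 3 requests
```

Whenever the total is a multiple of the number of servers, every server receives exactly the same share.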

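Now open a browser, go to http://localhost:8080/ and make a few requests. Each reload is answered by the next server in the rotation, so you should see the following output. (A browser may also request /favicon.ico in the background; that request takes a turn in the rotation too, so consecutive reloads can appear to skip a server.)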

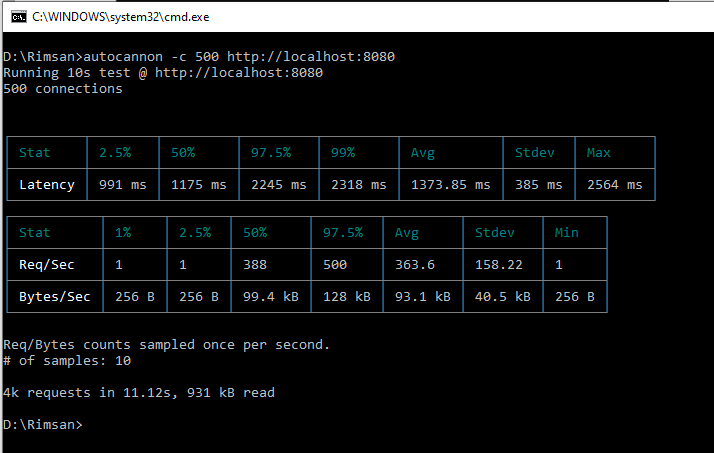
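Instead of reloading the browser, you can send the requests from a script, which also avoids extra background requests a browser may make, such as for /favicon.ico. A minimal sketch, assuming Node.js 18 or later (for the built-in `fetch`) and both scripts running; the `sampleResponses` helper name is hypothetical.

```javascript
// Hypothetical helper: send `count` GET requests one after another and
// collect the response bodies in order (needs Node.js 18+ for fetch).
async function sampleResponses(url, count) {
  const bodies = [];
  for (let i = 0; i < count; i++) {
    const res = await fetch(url);
    bodies.push(await res.text());
  }
  return bodies;
}

// With the load balancer running on port 8080, the responses should
// cycle through the three application servers in turn.
sampleResponses('http://localhost:8080/', 6)
  .then((bodies) => bodies.forEach((body) => console.log(body)))
  .catch((err) => console.error('Request failed:', err.message));
```

The requests are sent sequentially on purpose, so the printed order matches the order in which the load balancer assigned them.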

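Note that this setup does not use the Node.js cluster module, where a master process forks one worker per CPU core and distributes requests among them. Instead, the load balancer is a separate process that accepts every request and forwards it to one of three independent application servers. The performance in this case is shown below: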

All the code has been uploaded to GitHub (download).